Plugin system

Unlike AI frameworks, Agiflow aims to support the development of your AI applications seamlessly, while its plugin system helps you meet privacy, security, and quality checks both before and after production.

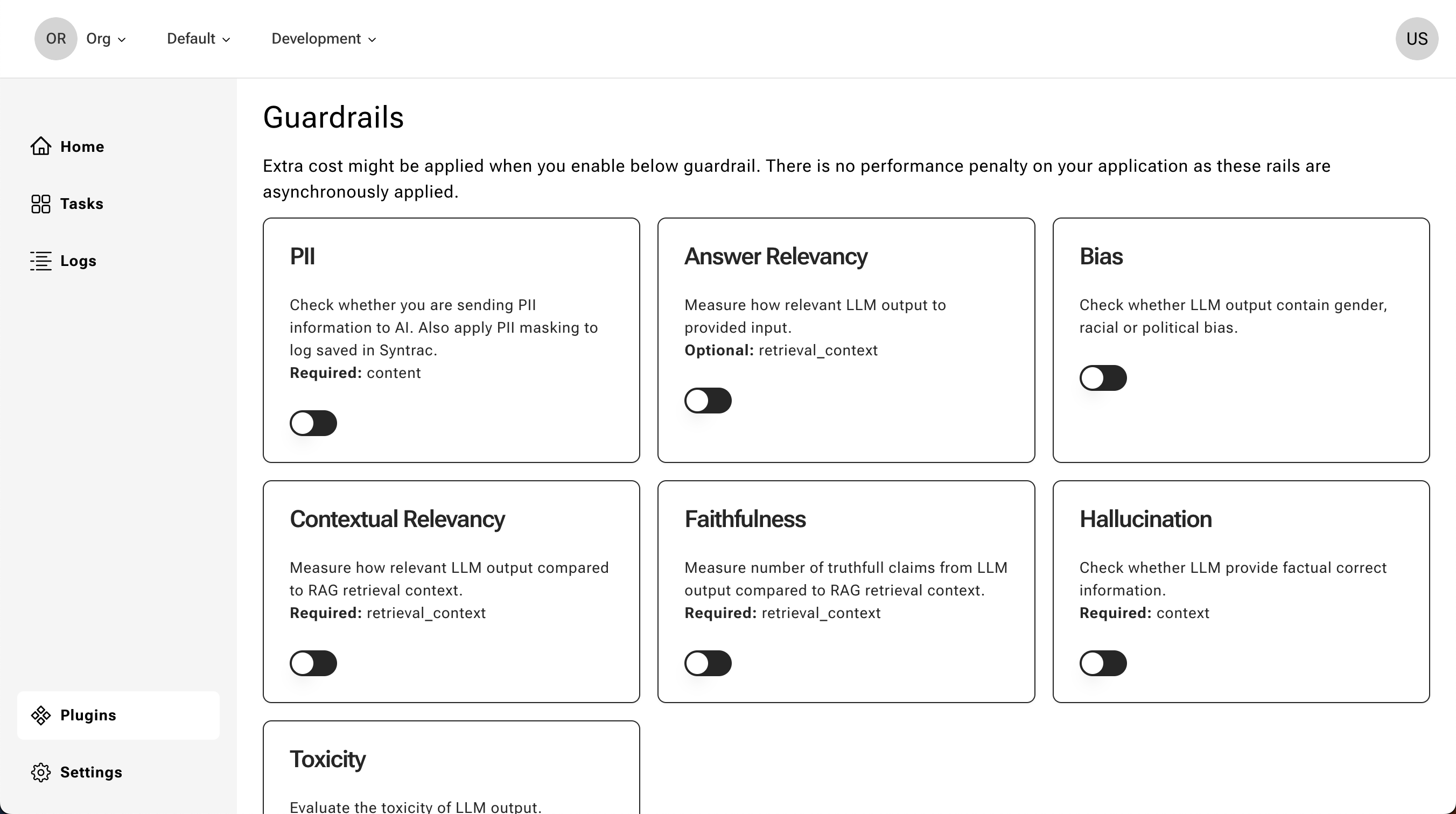

Guardrails

Guardrails play a critical role in operationalizing AI applications; nonetheless, not all guardrails need to be executed synchronously within the AI workflow.

Agiflow makes it easy for companies to delegate certain guardrail checks so that they do not have to run synchronously inside the AI workflow. This keeps your AI application fast while compliance and reporting standards are still upheld. Each guardrail is enabled with a single click of its Switch button.
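To illustrate what running a guardrail outside the synchronous workflow can look like, here is a minimal sketch. It is not Agiflow's implementation or API: `call_llm`, `toxicity_check`, and `handle_request` are hypothetical placeholders, and the check itself is a trivial keyword test standing in for a real, slower evaluation.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Tuple

# Hypothetical stand-ins for illustration only; none of these names come from Agiflow.
_executor = ThreadPoolExecutor(max_workers=2)


def call_llm(prompt: str) -> str:
    """Placeholder for a real model call."""
    return f"echo: {prompt}"


def toxicity_check(user_input: str, output: str) -> dict:
    """Placeholder for a slow guardrail that is safe to run after the response is sent."""
    flagged = "idiot" in output.lower()
    return {"guardrail": "toxicity", "flagged": flagged}


def handle_request(user_input: str) -> Tuple[str, Future]:
    """Return the answer immediately and schedule the guardrail in the background."""
    output = call_llm(user_input)
    pending = _executor.submit(toxicity_check, user_input, output)
    return output, pending


answer, pending = handle_request("hello")
report = pending.result(timeout=5)  # collected later, off the request path
```

The caller gets `answer` without waiting for the check; the report can be stored or surfaced once the background task finishes. Checks that must block a response (for example, before anything is shown to the user) would still need to run synchronously.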

Besides ensuring the quality of your end application, guardrail outputs are also used to give users contextual information on how to provide high-quality feedback. Here are the guardrails we currently support (you don't need to provide this data yourself, as it is collected automatically through telemetry):

PII

Enable this option to check whether you are inadvertently sending customers' personally identifiable information (PII) to AI providers. In addition, we mask customer PII before telemetry data is logged to our database.
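As a rough illustration of what masking before logging means, the sketch below replaces email addresses and phone-like numbers with placeholders. It is an assumption-laden example, not how Agiflow detects PII: the two regular expressions and the `mask_pii` helper are hypothetical and cover only a small part of what counts as PII.

```python
import re

# Simplified patterns for illustration; production PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with fixed placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


masked = mask_pii("Contact jane@example.com or +1 415-555-0100.")
```

Applying the mask before the text is written to a log keeps the raw values out of stored telemetry.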

Answer Relevancy

Wondering how relevant the LLM output generated by your prompt is to the input provided by the customer? This guardrail makes it easy to get that insight.

Data required:

- Input

- Actual Output

Bias

If checking for gender, racial, and political bias is important to your application, simply enable this guardrail.

Data required:

- Input

- Actual Output

Toxicity

Cross-check the toxicity level of your LLM output using another LLM.

Data required:

- Input

- Actual Output

Contextual Relevancy

If you have a RAG system that retrieves information and want to know whether your LLM's output is relevant to the retrieved information, just enable this guardrail.

Data required:

- Input

- Actual Output

- Retrieval Context

Faithfulness

Similar to Contextual Relevancy, but this guardrail instead measures whether the claims made by the LLM are truthful with respect to the retrieved context.

Data required:

- Input

- Actual Output

- Retrieval Context

Hallucination

If you want to know whether your LLM provides factually correct information, simply enable this guardrail.

Data required:

- Input

- Actual Output

- Context
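The data requirements listed for the guardrails above can be summarized as a lookup table. The sketch below is only an illustration of that summary: the field names (`input`, `actual_output`, `retrieval_context`, `context`), the guardrail keys, and the `runnable_guardrails` helper are hypothetical and are not part of Agiflow's API. PII is left out because no required data is listed for it.

```python
from typing import Dict, List, Set

# Required data per guardrail, transcribed from the lists above (key names are assumptions).
REQUIRED_FIELDS: Dict[str, Set[str]] = {
    "answer_relevancy": {"input", "actual_output"},
    "bias": {"input", "actual_output"},
    "toxicity": {"input", "actual_output"},
    "contextual_relevancy": {"input", "actual_output", "retrieval_context"},
    "faithfulness": {"input", "actual_output", "retrieval_context"},
    "hallucination": {"input", "actual_output", "context"},
}


def runnable_guardrails(record: dict) -> List[str]:
    """Return the guardrails whose required fields are all present and non-empty."""
    available = {key for key, value in record.items() if value}
    return sorted(
        name for name, needed in REQUIRED_FIELDS.items() if needed <= available
    )


record = {
    "input": "What is the refund policy?",
    "actual_output": "Refunds are available within 30 days.",
    "retrieval_context": ["Refund policy: 30 days from purchase."],
}
enabled = runnable_guardrails(record)
```

With this record, the guardrails that need a retrieval context can run, while Hallucination cannot because no `context` field is present.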

Eliminate guesswork and accelerate AI iteration

You can seamlessly integrate Agiflow from development environments to production. We facilitate easy testing of your AI enhancements across various environments using real data, enabling you to confidently deploy new releases with improved results in less time.